In this chapter, we are going to explore the phenomenon of Anderson localization in disordered systems in order to see how Krylov subspace methods can aid with studying this phenomenon in the limit of large systems.

import numpy as np

from IPython.display import Image, display

import matplotlib.pyplot as plt

%matplotlib inline

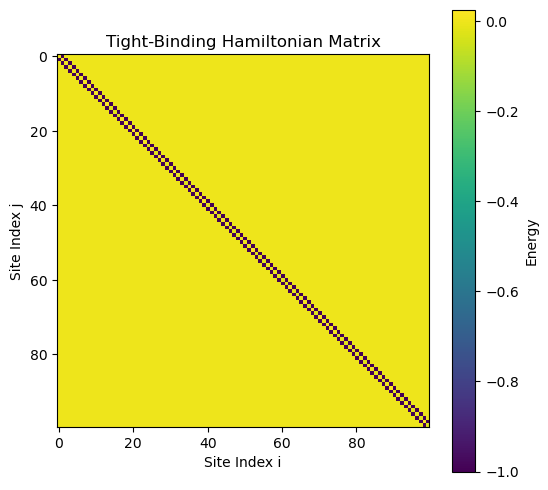

The tight-binding Hamiltonian¶

In a crystal with weakly overlapping orbitals, electrons hop between neighboring sites with a rate given by the binding strength . Static impurities at each shift the on-site energies (), giving rise to the tight-binding model

, where the vector is a unit vector localized on site , describing the case where an electron is exactly localized at . The first summation over all sites gives the on-site energy of an electron at site , and it corresponds to an identity matrix. The second summation gives the hopping terms between connected sites, where is the adjacency matrix of the lattice (i.e., if sites and are connected, and otherwise). Because we have defined a separate on-site term, by convention. For the particular case where the sites are arranged in a one-dimensional chain, the adjacency matrix has off-diagonal terms , and the Hamiltonian matrix is tridiagonal.

The Hamiltonian therefore has the form of an matrix, which gives the probability amplitude for an electron to hop from site to . In the standard version of this model, the on-site energies are drawn from a uniform distribution , where is the disorder strength.

Implementing the tight-binding Hamiltonian in Python¶

Below, we implement this case as a Python object. We store the physical parameters as instance attributes assigned when the object is initialized. We define a single method, energy that materializes the full Hamiltonian matrix. We add a decorator @property, which tells Python to treat the method as an attribute, so that we can call it as H.energy instead of H.energy(). This is a common Python idiom for attributes that are expensive to compute, but which we want to appear as simple attributes. It represents an example of lazy evaluation, where the value is only computed when it is needed. By default, Python does not cache outputs marked with @property, so the matrix will be re-computed each time it is accessed. However, advanced decorators such as @functools.cached_property can be used to cache the output after the first computation.

class TightBindingModel:

def __init__(self, J, w, n, random_state=None):

self.J = J

self.w = w

self.n = n

self.random_state = random_state

np.random.seed(self.random_state)

self.epsilon = np.random.uniform(-self.w / 2, self.w / 2, self.n)

@property

def energy(self):

H = np.zeros((self.n, self.n))

for i in range(self.n):

H[i, i] = self.epsilon[i]

if i < self.n - 1:

H[i, i + 1] = -self.J

H[i + 1, i] = -self.J

return H

tbh = TightBindingModel(J=1, w=0.05, n=100, random_state=0)

plt.figure(figsize=(6, 6))

plt.imshow(tbh.energy, cmap='viridis', interpolation='nearest')

plt.title('Tight-Binding Hamiltonian Matrix')

plt.colorbar(label='Energy')

plt.xlabel('Site Index i')

plt.ylabel('Site Index j')

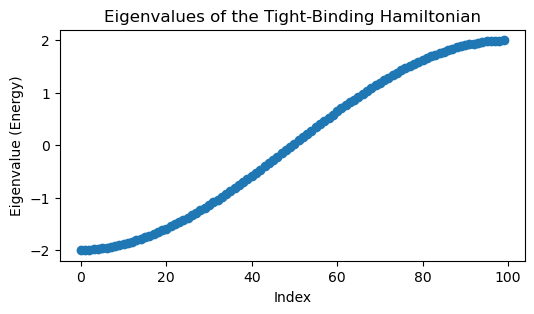

The spectrum of the matrix gives the energy levels of the system, and the eigenvectors give the wavefunctions of the electrons associated with that energy level,

where is the energy of the th eigenstate, and is the corresponding eigenvector. The eigenvectors are normalized such that . To convert a wavefunction into a probability distribution, we take the square of the coefficients, , which gives the probability of finding an electron in state at site .

eigvals, eigvecs = np.linalg.eigh(tbh.energy)

plt.figure(figsize=(6, 3))

plt.plot(eigvals, 'o')

plt.title('Eigenvalues of the Tight-Binding Hamiltonian')

plt.xlabel('Index')

plt.ylabel('Eigenvalue (Energy)')

Notice how all of the eigenvalues, and thus energies, are real, as we would expect for a physical system. This is because is a symmetric matrix, and so real eigenvalues are guaranteed by the spectral theorem.

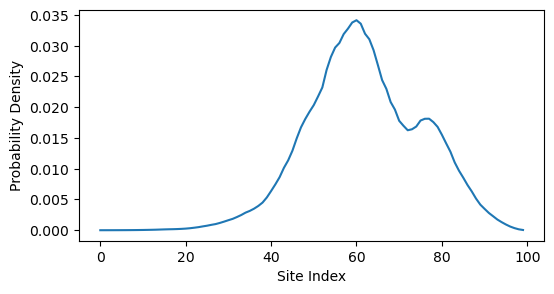

We are particularly interested in the low-temperature state of this system, which is the eigenvector associated with the lowest eigenvalue of . This eigenvector tells us how an electron would be distributed across the sites in the ground state of the system.

min_index = np.argmin(eigvals)

plt.figure(figsize=(6, 3))

plt.plot(eigvecs[:, min_index] ** 2)

plt.ylabel('Probability Density')

plt.xlabel('Site Index')

What happens if we increase the disorder in the system?

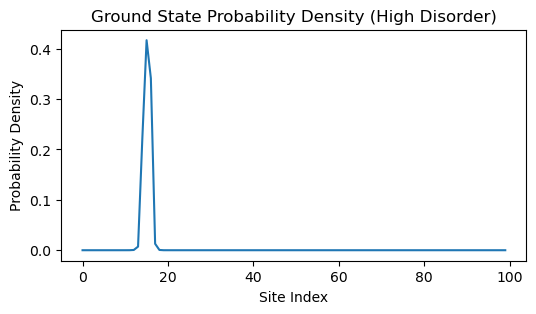

tbh = TightBindingModel(J=1, w=5, n=100, random_state=0)

eigvals, eigvecs = np.linalg.eigh(tbh.energy)

min_index = np.argmin(eigvals) # integer

plt.figure(figsize=(6, 3))

# eigvals[min_index]

plt.plot(eigvecs[:, min_index] ** 2)

plt.ylabel('Probability Density')

plt.xlabel('Site Index')

plt.title('Ground State Probability Density (High Disorder)')

We can see that, increasing the disorder strength causes the eigenvector to become more localized around a few sites. This is a hallmark of Anderson localization, where disorder in the system causes electrons to become trapped in certain regions rather than being able to move freely throughout the material.

Anderson localization on complex networks¶

Let’s now try to study Anderson localization in a larger system. Rather than continue the case where the sites are arranged in a 1D chain, let’s now consider a system with a more complex topology. We’ll start with a 1D chain, but we will introduce random long-range connections between sites by randomly choosing pairs of sites and connecting them with probability . This results in a disordered “small-world” network, which is more representative of real materials where electrons can hop between distant sites due to impurities or defects.

Note that there are now two sources of quenched disorder in our problem: the random on-site energies and the random long-range connections between sites.

class TightBindingRewiring(TightBindingModel):

def __init__(self, p, *args, **kwargs):

super().__init__(*args, **kwargs)

self.p = p

@property

def energy(self):

H = super().energy

for i in range(self.n):

for j in range(i + 2, self.n):

if np.random.rand() < self.p:

H[i, j] = -self.J

H[j, i] = -self.J

return H

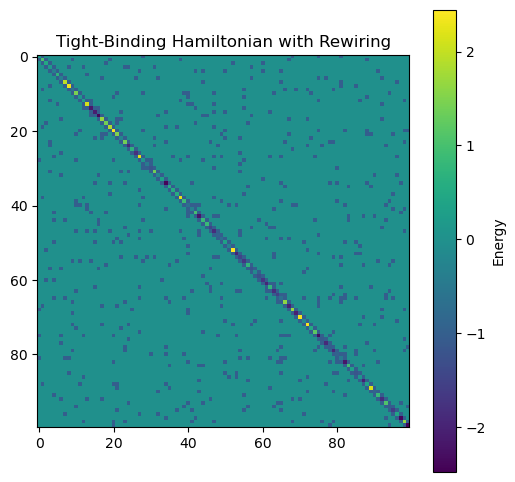

H = TightBindingRewiring(J=1, w=5, n=100, p=0.05, random_state=0)

plt.figure(figsize=(6, 6))

plt.imshow(H.energy, cmap='viridis', interpolation='nearest')

plt.title('Tight-Binding Hamiltonian with Rewiring')

plt.colorbar(label='Energy')

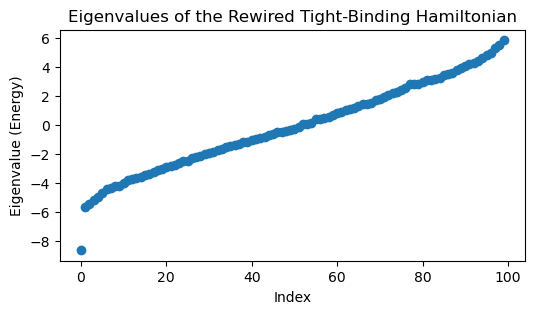

eigvals, eigvecs = np.linalg.eigh(H.energy)

plt.figure(figsize=(6, 3))

plt.plot(eigvals, 'o')

plt.title('Eigenvalues of the Rewired Tight-Binding Hamiltonian')

plt.xlabel('Index')

plt.ylabel('Eigenvalue (Energy)')

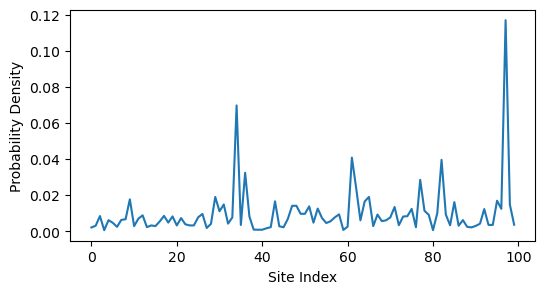

min_index = np.argmin(eigvals)

plt.figure(figsize=(6, 3))

plt.plot(eigvecs[:, min_index] ** 2)

plt.ylabel('Probability Density')

plt.xlabel('Site Index')

We can see that random rewiring disrupts the localized state. This is because long-range connections alter the topology of the network; the wavefunction may localize on the graph itself, but the graph is no longer embedded in a simple 1D space. In fact, the localized nodes map onto a community within the network, similar to our previous spectral graph theory example.

What happens if we try to scale up our calculation to a much larger system? Let’s try repeating our calculation with sites.

H = TightBindingRewiring(J=1, w=0.5, n=10000, p=0.05, random_state=0)

eigvals, eigvecs = np.linalg.eigh(H.energy)On most hardware, this calculation will not complete in a reasonable amount of time. The problem is that the Hamiltonian matrix is now . Finding the full eigenvalue spectrum thus requires operations, which is infeasible on a standard laptop. However, we suspect that we are wasting a lot of time computing eigenvalues and eigenvectors that we don’t care about. Because we are only interested in the lowest energy eigenvalue and its associated eigenvector, rather than the full spectrum.

Concentration of measure in high dimensions¶

To understand how we might accelerate our eigenspace calculation, we can gain some intuition from the field of high-dimensional geometry. In high-dimensional spaces, many counterintuitive phenomena arise due to the so-called “concentration of measure” effect. This effect states that in high-dimensional spaces, most of the volume of a shape is concentrated in a thin shell near its surface.

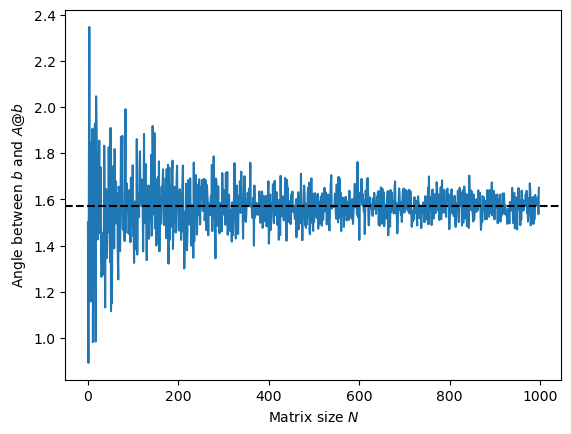

Below, we generate a random Hermitian matrix of increasing size , and we also generate a random vector . We compute the angle between the vector and the vector as the size of the space increases.

all_angles = list()

for n in range(2, 1000):

## Create a random Hermitian matrix

A = np.random.normal(size=(n, n))

A = A.T + A

b = np.random.normal(size=(n,))

bprime = A @ b

all_angles.append(

np.arccos(np.dot(b / np.linalg.norm(b), bprime / np.linalg.norm(bprime)))

)

plt.plot(all_angles)

plt.axhline(np.pi/2, 0, 1000, color='k', linestyle='--')

plt.xlabel('Matrix size $N$')

plt.ylabel('Angle between $b$ and $A @ b$')

The angle quickly converges to , indicating that the vectors and are nearly orthogonal in high dimensions. This is a manifestation of the concentration of measure effect, where random vectors in high-dimensional spaces tend to be nearly orthogonal to each other. The fact that is nearly orthogonal to suggests that the large matrix rotates the vector in a fully random direction.

What does this mean, in practice? If we are given a matrix and a vector in high dimensions, then we can find orthogonal basis directions quickly with individual matrix-vector products.

Krylov subspace methods¶

Many iterative algorithms for numerical linear algebra involve repeatedly multiplying a matrix by a vector in order to build up a solution gradaully. For example, the QR eigenvalue algorithm repeatedly multiplies a matrix by an matrix corresponding to an orthogonal basis set of vectors, while the power method repeatedly multiplies a matrix by a vector.

Our choice of target vector and the matrix operator jointly define the Krylov subspace of the pair

The subspace in question refers to the span of the vectors , which is a subspace of full the column span of . While the full column span is a property of alone, the specific subspace depends on . When the number of columns in is less than the number of rows () the rank of the Krylov subspace can range from 1 to . The +1 comes from the special case where is not in the column span of . However, when is a full-rank square matrix, then .

We store the vectors spanning the Krylov subspace in a matrix , where each is a column vector and . Each iteration adds a basis element to this set, and thus increases the number of columns in by one.

Properties of the Krylov subspace¶

There are a few key properties of Krylov subspaces, around which we can build iterative algorithms:

Shifting. Applying the matrix to the Krylov subspace performs a shift on the Krylov subspace: .

Rank. If , then the Krylov subspace is rank-deficient, and so there are linearly dependent vectors in the column span of the subspace matrix .

Span. If is a full-rank matrix, then is also a full-rank matrix.

The main reason that we are interested in Krylov subspace methods is because we can perform cheap matrix-vector products () to extend the space. Often, the matrix encodes our dataset, simulation, or other fixed set of constraints, and the vector encodes our initial guess, prior knowledge, or an error term that we seek to minimize.

Common examples of Krylov subspace methods include the power method and inverse methods for finding the largest and smallest eigenvalues of matrices, and the Lanczos algorithm for finding eigenvalues and eigenvectors of a large sparse matrix, and the GMRES, MINRES, and conjugate gradient method for solving systems of linear equations.

Testing the properties of Krylov subspaces¶

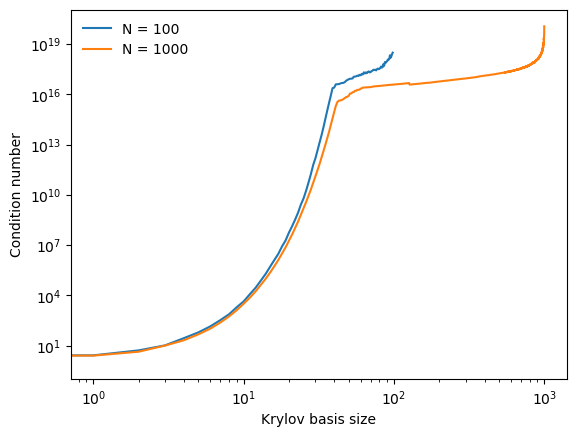

In the code below, we explore the structure of Krylov subspaces when and then when . We generate a random symmetric matrix and a random vector , and we iteratively build up the Krylov subspace by repeatedly multiplying by the current basis vector. At each iteration, we measure the condition number of the Krylov subspace matrix A high condition number indicates that the matrix is close to being rank-deficient, while a low condition number indicates that the matrix is well-conditioned, with full rank and nearly-orthogonal columns.

all_condition_numbers = list()

for nval in [100, 1000]:

# nval = 200

## Create a random Hermitian matrix

np.random.seed(1)

A = np.random.normal(size=(nval, nval))

A = A.T + A

## Create a random starting basis vector

b = np.random.normal(size=(nval,))

b /= np.linalg.norm(b)

krylov_basis = [b]

condition_numbers = list()

for i in range(1, nval):

## Multiply the last vector in the Krylov basis by A

krylov_i = A @ krylov_basis[-1]

krylov_i /= np.linalg.norm(krylov_i)

krylov_basis.append(krylov_i)

condition_numbers.append(np.linalg.cond(np.array(krylov_basis)))

krylov_basis = np.array(krylov_basis)

condition_numbers = np.array(condition_numbers)

print(f"N = {nval} Condition numbers: {", ".join(f"{x:.2f}" for x in condition_numbers[:2])} ,... {", ".join(f"{x:.2e}" for x in condition_numbers[-2:])}")

plt.loglog(condition_numbers, label=f"N = {nval}")

all_condition_numbers.append(condition_numbers)

plt.legend(frameon=False)

plt.xlabel('Krylov basis size')

plt.ylabel('Condition number')

N = 100 Condition numbers: 1.00, 2.62 ,... 2.53e+18, 3.10e+18

N = 1000 Condition numbers: 1.02, 2.53 ,... 3.90e+19, 1.16e+20

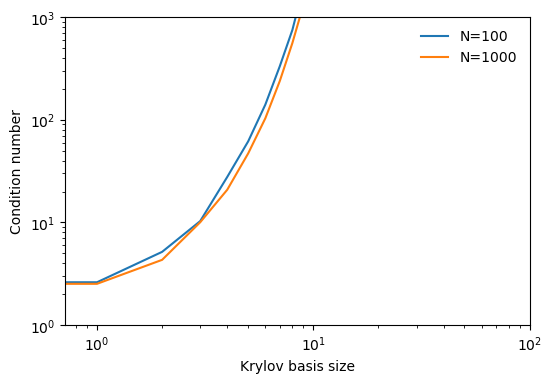

plt.figure(figsize=(6, 4))

plt.plot(all_condition_numbers[0], label='N=100')

plt.plot(all_condition_numbers[1], label='N=1000')

plt.yscale('log')

plt.xscale('log')

plt.xlim([0, 100])

plt.ylim([1, 1e3])

plt.legend(frameon=False)

plt.xlabel('Krylov basis size')

plt.ylabel('Condition number')/var/folders/79/zct6q7kx2yl6b1ryp2rsfbtc0000gr/T/ipykernel_26650/1742798867.py:6: UserWarning: Attempt to set non-positive xlim on a log-scaled axis will be ignored.

plt.xlim([0, 100])

We can see that the condition number of the higher-dimensional Krylov subspace initially grows more slowly than the lower-dimensional case, though it will eventually surpass it because it can be iterated for a larger number of steps. This slower initial growth is a consequence of the concentration of measure phenomenon, because the early basis vectors are more likely to be orthogonal to each other, and so the condition number is lower.

However, at the end stages of the Krylov iteration, the condition number grows larger for the higher-dimensional case. We know from previous chapters that the condition number of a random matrix is due to the birthday paradox. However, we see instead that the condition number is much, much higher. This implies that the Krylov subspace is saturating onto a lower-dimensional subspace than , resulting in vectors that are closer to parallel than would be expected in the case where each vector is sampled entirely randomly. We thus suspect that, in high dimensions, the dynamics of are more constrained than random sampling would imply.

What does the Krylov subspace look like?¶

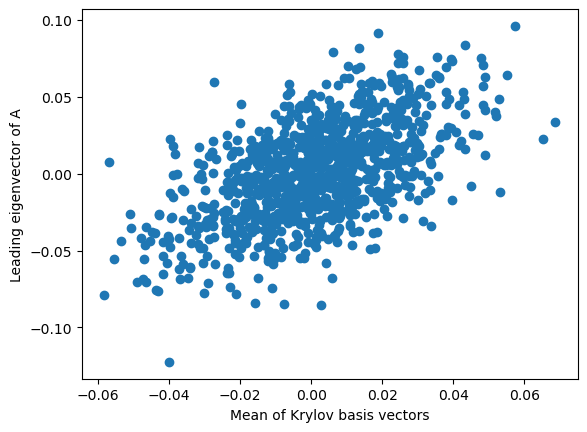

To help understand the shape of the Krylov subspace, we take the elementwise average of the entire Krylov basis set, resulting in a single -dimensional vector. We compare this to the eigenvector associated with the largest eigenvalue of .

# Find the true eigenvalues and eigenvectors and sort in descending order of eigenvalue magnitude

eigenvalues, eigenvectors = np.linalg.eigh(A)

sorted_indices = np.argsort(eigenvalues)[::-1]

eigenvalues = eigenvalues[sorted_indices]

eigenvectors = eigenvectors[:, sorted_indices]

# Find the average of the Krylov basis vectors

average_krylov_element = np.mean(krylov_basis, axis=0)

plt.plot(average_krylov_element, eigenvectors[:, 0], 'o')

plt.xlabel('Mean of Krylov basis vectors')

plt.ylabel('Leading eigenvector of A')

We can see that the Krylov subspace is concentrated around the largest eigenvector of . This is a consequence of the power method, which itself states that linear dynamical systems asymptotically pull points onto a lower-dimensional subspace associated with the largest eigenvalue. But what about the other eigenvectors? We have an Krylov subspace, as well as a set of eigenvectors of . How do these two sets of vectors relate to each other?

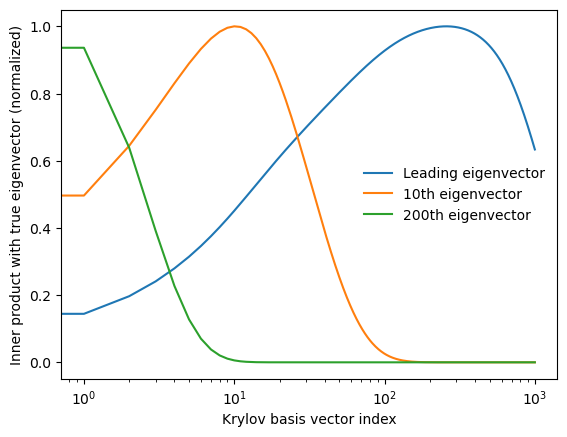

To test this relationship, we compute the dot product between each eigenvector and each Krylov basis vector, resulting in an matrix of dot products. Since both bases consist of normalized unit vectors, we can interpret the dot products as cosines of angles between the vectors, or, equivalently, the Pearson correlation between each element of the Krylov subspace and each true eigenvector.

# Find the inner product of the ith Krylov basis vector with the jth eigenvector

inner_product = krylov_basis @ eigenvectorsplt.semilogx(np.abs(inner_product)[:, 0] / np.max(np.abs(inner_product)[:, 0]), label='Leading eigenvector')

plt.semilogx(np.abs(inner_product)[:, 10] / np.max(np.abs(inner_product)[:, 10]), label='10th eigenvector')

plt.semilogx(np.abs(inner_product)[:, 200] / np.max(np.abs(inner_product)[:, 200]), label='200th eigenvector')

plt.legend(frameon=False)

plt.xlabel('Krylov basis vector index')

plt.ylabel('Inner product with true eigenvector (normalized)')

We can see that each true eigenvector is associated with a different timescale in the Krylov iteration. Less important eigenvectors associated with smaller eigenvalues only correlate with the Krylov basis vector early on, but the most important eigenvectors associated with the largest eigenvalues correlate with later Krylov basis vectors. The Krylov basis therefore gradually squeezes directions out of the system, resulting in a low-dimensional subspace associated with the largest eigenvalues of .

Arnoldi iteration as a Krylov method¶

Arnoldi iteration is an eigenvalue and eigenvector algorithm that uses the Krylov subspace to iteratively construct an orthogonal basis for a matrix . It is similar to the QR eigenvalue algorithm, but it allows us to stop after the first terms of the factorization of the full matrix .

Conceptually, Arnoldi blends aspects of Gram-Schmidt with the power method. Rather than finding eigenvalues by repeatedly applying QR to a matrix , we instead repeatedly apply to a vector , and then orthogonalize the resulting vector against all previous basis vectors. This allows us to find leading eigendirections within a single Gram-Schmidt sequence.

The Arnoldi algorithm¶

Choose a random starting vector of length and normalize it to have unit length

For :

Find the next candidate Krylov subspace element by computing .

For :

Compute the projection of each prior subspace vector onto the new element

Perform a single Gram-Schmidt update

Compute the normalization constant

Compute the final new basis element

Return and

The matrix is an upper Hessenberg matrix, which is nearly upper triangular but has an additional subdiagonal. The eigenvalues of are called the Ritz values of , and they approximate the eigenvalues of . The eigenvectors of can be used to construct approximate eigenvectors of by multiplying them by the Krylov basis matrix .

In the code below, we implement the Arnoldi algorithm as a Python function. We test it on a random symmetric matrix and compare the eigenvalues and eigenvectors returned by Arnoldi iteration to those computed with NumPy’s built-in np.linalg.eig function.

Ordering the Arnoldi algorithm¶

As we saw previously for Gram-Schmidt, this approach does not necessarily give us the eigenvectors in order of decreasing eigenvalue and thus relevance to our problem. To fix this, we can manipulate the starting direction of the iteration by exploiting some properties of high-dimensional vector spaces. The first will be to use a warm start. Rather than picking a randomly-oriented starting vector , we will instead pick a starting vector aligned with the average bearing direction of the column space.

We then immediately construct an order Krylov subspace, starting from this vector. We know that dynamical systems tend to concentrate around the leading eigenvector, and so we orthogonalize with Gram-Schmidt in the reverse order of the original iteration, so that the first eigenvector (the one around which we orthogonalize first) is the one associated with the largest eigenvalue. This is a simple modification to the Arnoldi algorithm, but it results in a much more useful ordering of the eigenvectors.

class Arnoldi:

"""

Arnoldi iteration to find an orthonormal basis for the Krylov subspace of a

Hermitian matrix A.

"""

def __init__(self, random_state=None, store_history=False):

self.random_state = random_state

np.random.seed(self.random_state)

self.store_history = store_history

if self.store_history:

self.history = list()

def solve(self, A, k):

"""

Perform Arnoldi iteration to find an orthonormal basis for the Krylov subspace

of the Hermitian matrix A.

Args:

A (np.ndarray): Hermitian matrix of shape (n, n).

k (int): Number of Krylov basis vectors to compute.

Returns:

np.ndarray: Orthonormal basis for the Krylov subspace of shape (n, k).

"""

n = A.shape[0]

H = np.zeros((k, k))

## Warm start using the column average

q = np.mean(A, axis=0)

q /= np.linalg.norm(q)

self.krylov_basis = [q]

## Now run Gram-Schmidt orthogonalization

for i in range(1, k):

vi = A @ self.krylov_basis[i - 1].copy()

hi = np.array(self.krylov_basis)[:i] @ vi # Project new vector onto existing basis

vi -= hi @ np.array(self.krylov_basis)[:i] # Gram-Schmidt orthogonalization

qi = vi / np.linalg.norm(vi) # Normalize final vector

self.krylov_basis.append(qi)

if self.store_history:

self.history.append(np.array(self.krylov_basis[:i+1]))

return np.array(self.krylov_basis).T

## Make a random Hermitian matrix and regression target

np.random.seed(0)

a_big = np.random.random((3000, 3000))

a_big = a_big.T + a_big

## Find only the first 5 Arnoldi vectors

arnoldi_vectors = Arnoldi(random_state=0).solve(a_big, 5)

## Get numpy eigenvalues and eigenvectors and sort in descending order of eigenvalue magnitude

eigenvalues, eigenvectors = np.linalg.eigh(a_big)

sorted_indices = np.argsort(eigenvalues)[::-1]

eigenvalues = eigenvalues[sorted_indices]

eigenvectors = eigenvectors[:, sorted_indices]

## Compare numpy eigenvectors to Arnoldi vectors

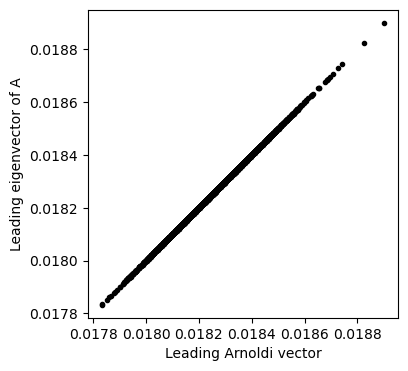

plt.figure(figsize=(4, 4))

plt.plot(arnoldi_vectors[:, 0], eigenvectors[:, 0], '.k')

plt.xlabel('Leading Arnoldi vector')

plt.ylabel('Leading eigenvector of A')

plt.axis('equal')(np.float64(0.017780210254918508),

np.float64(0.018953757843151453),

np.float64(0.01778003795677678),

np.float64(0.01895350553644324))

What is going on here?¶

The full QR factorization of a matrix can be written the form , where . is an orthogonal matrix, and so the case of full factorization corresponds to rotating the matrix into a basis where the eigenvectors are the columns. As in QR factorization, the matrix is upper triangular, and it is known as the Hessenberg matrix.

If we only want to compute the first eigenvalues of a matrix, then we we seek a low-rank approximation of . The partial basis , has the property

where is the first and elements of the full Hessenberg matrix . Our output is the reduced matrix . Each iteration finds a new basis vector for the corresponding rank- Krylov subspace. After stopping at our of interest, we can find the reduced Hessenberg matrix , where .

Finding subleading eigenvalues with the deflation method¶

The approach above works for our purposes because we are only interested in the leading eigenvector of the system. The subleading directions will be returned in an arbitrary order. We can extend this approach to a full reordering of the eigenvectors by applying the same logic again. After finding the leading eigenvector, we can project it out of the matrix using a rank-1 update, and then repeat the Arnoldi iteration on the modified matrix. This will return the second eigenvector, which we can again project out of the matrix, and so on. This approach is called the deflation method, and it is a common technique for finding multiple eigenvalues and eigenvectors of a matrix.

The key step that makes deflation work is that we can remove a subspace from the row and column space of a matrix. If we want to remove the leading eigenvector associated with eigenvalue , we perform the rank-1 update,

This step removes the first-order approximation of the matrix via the singular value decomposition of . The new matrix has the same eigenvectors as , but the leading eigenvector of is now the second eigenvector of . We can thus repeat the Arnoldi iteration on to find the second eigenvector, and so on. In order to deflate out multiple subspaces at once, we can once again invoke notation from the singular value decomposition. If we have already found eigenvectors, we can store them in a matrix , and their associated eigenvalues in a diagonal matrix . We can then perform the rank- update,

to remove the subspace spanned by the first eigenvectors.

The Rayleigh quotient estimates eigenvalues from eigenvectors¶

We cannot perform deflation with an estimate of the previous eigenvalues in descending order. It turns out that, after finding an eigenvector , we can estimate the associated eigenvalue using the Rayleigh quotient,

where the denominator is simply a normalization constant that we can safely ignore if is already unit vector.

Implementing the deflated power method¶

We know that the power method causes a random vector to converge to the leading eigenvector of a matrix . We will implement this procedure as a private method in our DeflatedPowerMethod class, because we will need to use it first on the original matrix , and then on the deflated matrix , and so on. Our implementation will start by finding the leading direction via power iteration, then find the associated eigenvalue via the Rayleigh quotient, and then deflate the matrix and repeat the process.

class DeflatedPowerMethod:

"""Compute the first k eigenvectors of a matrix A using the deflated power method.

Attributes:

random_state (int): Random seed for reproducibility.

store_history (bool): Whether to store the history of Krylov bases.

max_iter (int): Maximum number of iterations for the power method.

"""

def __init__(self, random_state=None, store_history=False, max_iter=100):

self.random_state = random_state

np.random.seed(self.random_state)

self.store_history = store_history

self.max_iter = max_iter

if self.store_history:

self.history = list()

def _power_method(self, A, q=None):

"""Given a a matrix A and an optional starting vector q,

use the power method to find the leading eigenvector of A.

Args:

A (np.ndarray): The input matrix.

q (np.ndarray, optional): The starting vector. If None, a random vector

is used.

Returns:

np.ndarray: The leading eigenvector of A.

"""

n = A.shape[0]

## If a warm start is not provided, pick a random direction

if q is None:

q = np.random.normal(size=(n,))

q /= np.linalg.norm(q)

for _ in range(self.max_iter):

q = A @ q

q /= np.linalg.norm(q)

return q

def solve(self, A, k):

"""Find the first k eigenvectors of A using the deflated power method.

Args:

A (np.ndarray): The input matrix.

k (int): The number of eigenvectors to compute.

Returns:

np.ndarray: The first k eigenvectors of A as columns of a matrix.

"""

# Warm start for first eigenvector using the columnwise average

q = np.mean(A, axis=0)

q /= np.linalg.norm(q)

# Power iteration converges to the leading eigenvector

q = self._power_method(A, q)

self.krylov_basis = [q]

self.krylov_eigenvalues = [q.T @ A @ q]

for r in range(1, k):

V = np.array(self.krylov_basis[:r]).T # (n, r)

lam = np.array(self.krylov_eigenvalues[:r]) # (r,)

A_deflated = A - V @ np.diag(lam) @ V.T # (n, n)

q = self._power_method(A_deflated)

self.krylov_basis.append(q)

self.krylov_eigenvalues.append(q.T @ A @ q)

return np.array(self.krylov_basis[:k]).T

## Make a random Hermitian matrix and regression target

np.random.seed(0)

a_big = np.random.random((1000, 1000))

a_big = a_big.T + a_big

## Find only the first 5 Arnoldi vectors

arnoldi_vectors = DeflatedPowerMethod(random_state=0, max_iter=500).solve(a_big, 10)

## Get numpy eigenvalues and eigenvectors and sort in descending order of eigenvalue magnitude

eigenvalues, eigenvectors = np.linalg.eigh(a_big)

sorted_indices = np.argsort(eigenvalues)[::-1]

eigenvalues = eigenvalues[sorted_indices]

eigenvectors = eigenvectors[:, sorted_indices]

## Compare numpy eigenvectors to Arnoldi vectors

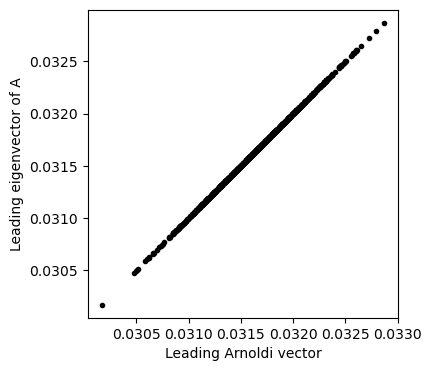

plt.figure(figsize=(4, 4))

plt.plot(arnoldi_vectors[:, 0], eigenvectors[:, 0], '.k')

plt.xlabel('Leading Arnoldi vector')

plt.ylabel('Leading eigenvector of A')

plt.axis('equal')(np.float64(0.03003694728064225),

np.float64(0.03300286284201122),

np.float64(0.030036947280642243),

np.float64(0.033002862842011225))

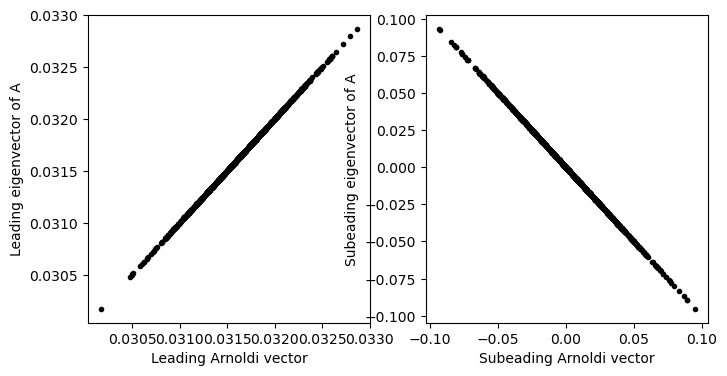

plt.figure(figsize=(8, 4))

plt.subplot(1, 2, 1)

plt.plot(arnoldi_vectors[:, 0], eigenvectors[:, 0], '.k')

plt.xlabel('Leading Arnoldi vector')

plt.ylabel('Leading eigenvector of A')

plt.subplot(1, 2, 2)

plt.plot(arnoldi_vectors[:, 1], eigenvectors[:, 1], '.k')

plt.xlabel('Subeading Arnoldi vector')

plt.ylabel('Subeading eigenvector of A')

# plt.axis('equal')

How does localization vary with system size?¶

Now that we have a faster method for computing spectra, we can return to our original problem of computing Anderson localization on a disordered network.

H = TightBindingRewiring(J=1, w=10, n=100, p=0.01, random_state=0)

# arnoldi_vectors = Arnoldi(random_state=0, store_history=True).solve(H.energy, 2) # what

arnoldi_vectors = DeflatedPowerMethod(random_state=0, max_iter=500).solve(H.energy, 2)

plt.figure(figsize=(6, 3))

plt.plot(arnoldi_vectors[:, 0] ** 2)

plt.ylabel('Probability Density')

plt.xlabel('Site Index')

# H = TightBindingRewiring(J=1, w=3, n=600, p=0.01, random_state=0)

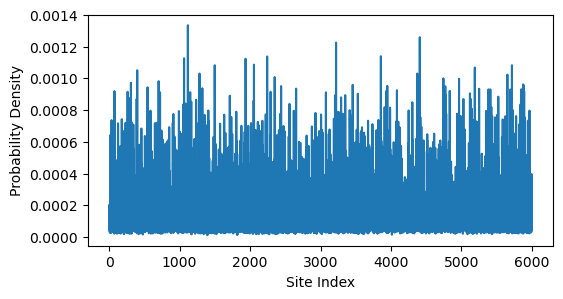

H = TightBindingRewiring(J=1, w=100, n=6000, p=0.01, random_state=0)

# arnoldi_vectors = Arnoldi(random_state=0, store_history=True).solve(H.energy, 50)

arnoldi_vectors = DeflatedPowerMethod(random_state=0, max_iter=500).solve(H.energy, 2)

plt.figure(figsize=(6, 3))

plt.plot(arnoldi_vectors[:, 0] ** 2)

plt.ylabel('Probability Density')

plt.xlabel('Site Index')

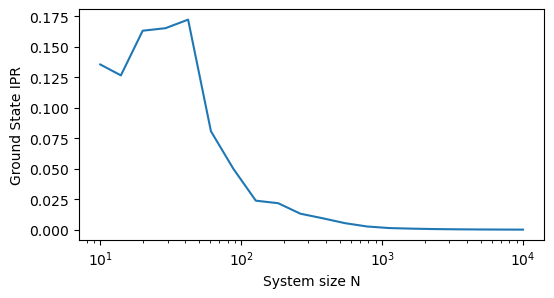

It looks like the eigenvector becomes more delocalized as increases. However, it could just appear this way because we are zooming out over more sites. To test this, we can compute the inverse participation ratio (IPR) of the eigenvector, which is a measure of localization that is independent of system size. The IPR is defined as

When this value is close to 1, the eigenvector is localized on a single site, while when it is close to , the eigenvector is delocalized across all sites. We can repeat our calculation for different system sizes and plot the IPR as a function of .

from numpy import astype

def inverse_participation_ratio(psi):

return np.sum(psi**4) / (np.sum(psi**2)**2)

## Logarithmically spaced sizes from 10^1 to 10^3

nvals = np.logspace(1, 4, 20).astype(int)

all_iprs = list()

for nval in nvals:

H = TightBindingRewiring(J=1, w=0.6, n=nval, p=0.005, random_state=0)

# eigvals, eigvecs = np.linalg.eigh(H.energy)

# min_index = np.argmin(eigvals)

# psi = eigvecs[:, min_index]

# psi = Arnoldi(random_state=0, store_history=True).solve(H.energy, 0)[:, 0]

arnoldi_vectors = DeflatedPowerMethod(random_state=0, max_iter=500).solve(H.energy, 2)

psi = arnoldi_vectors[:, 0]

inverse_participation_ratio(psi)

all_iprs.append(inverse_participation_ratio(psi))

plt.figure(figsize=(6, 3))

plt.semilogx(nvals, all_iprs)

plt.xlabel('System size N')

plt.ylabel('Ground State IPR')

This calculation confirms our intuition, that the system becomes more delocalized as the number of sites increaes. This is a common phenomenon in condensed matter physics, where larger systems tend to have more delocalized states due to the increased relative influence of long-range connections and disorder.

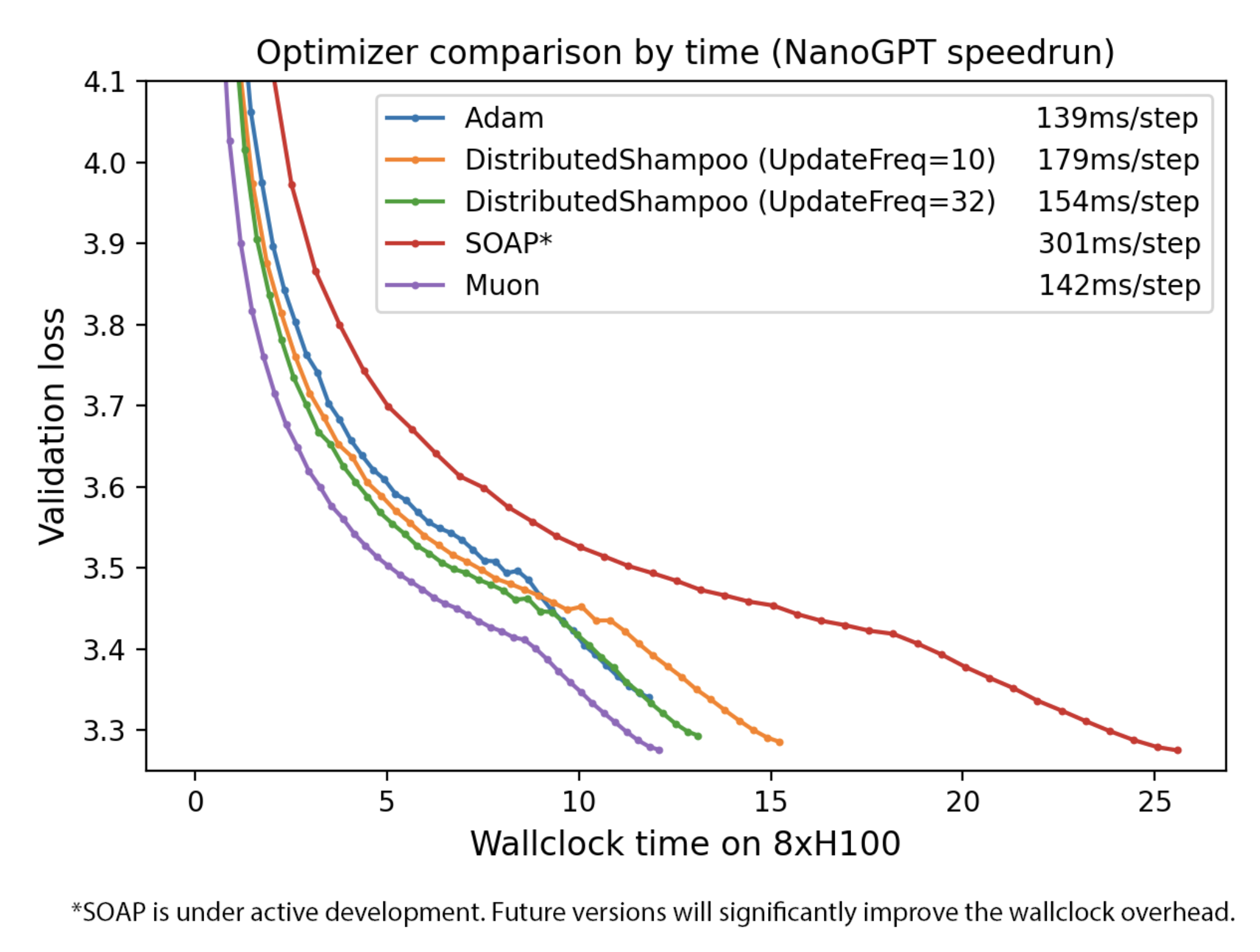

Applications of Krylov methods: orthogonal updates in optimizers¶

A typical modern neural network like DeepSeek-R1 has on the order of 1010 parameters. Training such a network requires computing gradients of the loss function with respect to each parameter. A typical network contains dense, or fully-connected, layers that store parameters as large matrices of weights. A modern weight matrix can reach sizes as large as or larger. In order to update these 106 free parameters during training, the matrix is typically updated elementwise based on an update matrix that has the same shape

where is the learning rate, and is encodes the changes to be made to each parameter. Typically, the matrix contains updates calculated separately for each element of , based on backpropagation, and so this aapproach updates each element of the matrix separately, and it does not take into account any structure in the matrix .

However, recent approaches to neural network training have explored the idea of updating the weight matrix in a way that preserves its shape. For example, instead of updating the weights using the matrix , we instead apply an orthogonal update,

where is an orthogonalized version of the update matrix . However, since can be a very large matrix, a key bottleneck is quickly computing from . This can be done using direct orthogonalization methods, as in the Muon optimizer, or it can be done using iterative Krylov methods like the power method, as in the Dion optimizer.

Image from Keller Jordon’s blog post on the Muon optimizer

- Anderson, P. W. (1958). Absence of Diffusion in Certain Random Lattices. Physical Review, 109(5), 1492–1505. 10.1103/physrev.109.1492

- Watts, D. J., & Strogatz, S. H. (1998). Collective dynamics of ‘small-world’ networks. Nature, 393(6684), 440–442. 10.1038/30918